
Yes, Mark Zuckerberg, You've Really Messed Up Another One

Facebook CEO Pledges Changes, But User Outrage Continues

"We really messed this one up."


That's Facebook CEO Mark Zuckerberg writing in 2006, addressing concerns that the social networking company bungled privacy controls when it launched its News Feed.

Twelve years later, Zuckerberg could have written the same line again following the uproar over the acquisition of up to 60 million Facebook profiles by voter-profiling firm Cambridge Analytica (see Facebook and Cambridge Analytica: Data Scandal Intensifies).

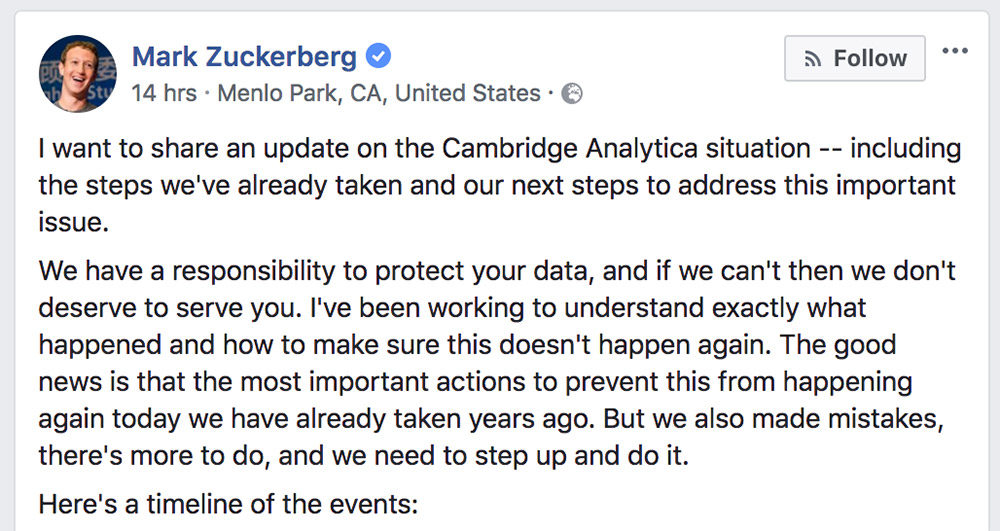

On Wednesday, Zuckerberg broke five days of silence as pressure intensified on Facebook to account for what happened. In a lengthy post, Zuckerberg pledged to make changes to better protect personal data.

"I started Facebook, and at the end of the day, I'm responsible for what happens on our platform," he writes. "I'm serious about doing what it takes to protect our community."

In an interview with CNN, Zuckerberg said he suspects efforts are underway to influence the U.S. mid-term elections in November.

"I'm sure that there's v2, version two, of whatever the Russian effort was in 2016, I'm sure they're working on that," he told CNN. "And there are going to be some new tactics that we need to make sure that we observe and get in front of."

The reaction to Zuckerberg, as well as to a separate status update by Facebook COO Sheryl Sandberg on her personal page, was not kind, to say the least.

"In my opinion you should not hide behind a Facebook post when you lead a company with the data of 2.2 billion people that many of us now fear is jeopardizing democracy at a global scale," one commenter wrote to Zuckerberg. "It's sad to have to write it this way, but so it is."

Another wrote to Sandberg: "I'm throwing up. You are not portraying the truth. FB is too big to control. Your backend technology is a mess, and you'll never be able to fix the siphoning of data that Cambridge and other predators have been sucking from FB for years."

App Changes Promised

Privacy activists have been justifiably honking their horns for a decade about Facebook's ever-changing privacy settings and whether it was transparent in communicating to users what data its platform shared, and with whom. Also of concern: The liberal access it has granted to outside app developers via its API, offering them an incredibly valuable data set on more than 2 billion users.

As the Cambridge Analytica scandal continues to unfold, the U.S. Federal Trade Commission has launched an investigation, as have state attorneys general in New York, New Jersey and Massachusetts. The U.K.'s Information Commissioner's Office and Canada's privacy commissioner are also investigating.

Cambridge Analytica obtained the data set from Aleksandr Kogan, a lecturer at the University of Cambridge. He created an app called "thisisyourdigitallife," which purported to be a personality quiz.

The app was deployed for at least two to three months in 2014. Under Facebook's developer rules at the time, the app was allowed to scrape profile information and data from the friends of someone who used the app.

As many as 270,000 people used "thisisyourdigitallife," which opened the door for the collection of personal data on as many as 60 million other people without their permission.
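To put those two figures in proportion, here is a quick back-of-the-envelope calculation. It uses only the upper-bound numbers cited above and assumes, for simplicity, that the 60 million profiles are spread evenly across app users; the variable names are illustrative.

```python
# Upper-bound figures cited in the article.
app_users = 270_000           # people who used "thisisyourdigitallife"
other_profiles = 60_000_000   # other people whose data was collected

# Average number of additional profiles exposed per app user,
# assuming (simplistically) an even spread across users.
profiles_per_user = other_profiles / app_users

print(f"Roughly {round(profiles_per_user)} other profiles per app user")
```

Because friend lists overlap, the real number of friends per app user would have been higher than this average suggests.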

Facebook contends Kogan lied to the company and passed the data to Cambridge Analytica, against its rules. Facebook discovered the situation in 2015, and Cambridge Analytica and Kogan then certified to the company that they had deleted the data.

But The New York Times, the Observer and Britain's Channel 4 have cited insiders saying that at least some of the data was still available.

Facebook has taken steps designed to block these types of data grabs. Zuckerberg says that Facebook limited the amount of data that apps could access in 2014. Now, as a result of the Cambridge Analytica situation, Zuckerberg says that Facebook will "investigate all apps that had access to large amounts of information before we changed our platform to dramatically reduce data access."

Facebook will request an audit of all apps that it flags, and if developers refuse, they will be banned, he writes. If personally identifiable information was misused by a developer, Facebook will also inform affected users.

The company is also promising to tighten its rules around apps. If someone hasn't used an app for more than three months, Zuckerberg says Facebook will remove that app's access to the person's data. Also, when someone signs into an app, the app will only be able to access the person's profile photo, name and email address. Facebook will also make it clearer which apps someone has used and offer an easier way to revoke their permissions.
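The promised rules can be read as a simple access check. The sketch below is purely illustrative and is not Facebook's implementation: the names `AppGrant`, `accessible_fields`, `BASIC_FIELDS` and `INACTIVITY_LIMIT` are hypothetical, "three months" is approximated as 90 days, and the idea of extra fields unlocked after approval is an assumption drawn from the contract requirement Zuckerberg describes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumption: "three months" of inactivity approximated as 90 days.
INACTIVITY_LIMIT = timedelta(days=90)

# The three fields the article says an app gets at sign-in.
BASIC_FIELDS = frozenset({"name", "profile_photo", "email"})


@dataclass
class AppGrant:
    """Hypothetical record of one user's authorization of one app."""
    app_id: str
    last_used: datetime
    # Assumption: extra fields unlocked only after approval and a signed contract.
    approved_fields: frozenset = frozenset()


def accessible_fields(grant: AppGrant, requested: set, now: datetime) -> set:
    """Return the subset of requested fields the app may read right now."""
    # Rule 1: access lapses once the user has been inactive for too long.
    if now - grant.last_used > INACTIVITY_LIMIT:
        return set()
    # Rule 2: otherwise only basic fields plus explicitly approved ones.
    allowed = BASIC_FIELDS | grant.approved_fields
    return set(requested) & allowed
```

Under these assumptions, a quiz app last used in January that asks for a user's name and posts in February would get only the name, and by June it would get nothing at all.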

"We'll require developers to not only get approval but also sign a contract in order to ask anyone for access to their posts or other private data," Zuckerberg writes.

Facebook's Terms: Unenforceable

Facebook has long made public commitments to privacy, and when the situation has gone south, issued the usual corporate bromides. But the technology giant seemed resistant to making drastic changes for fear of jeopardizing its ever-rising revenue.

The Cambridge Analytica situation highlights the company's fundamental and avoidable error: It trusted app developers not to misuse data, but without any means of verifying or enforcing those assurances. Once Facebook allows access to personal data, as with any leak or data breach, there's no way to reel it back.

But the data that app developers have had access to is what has made Facebook such a power in the digital advertising business. Without Facebook's intimate knowledge of its users, micro-targeting people becomes less effective, and thus, much less profitable for Facebook.

Peak Privacy Debate

Privacy debates tend to fade quickly. Most users move on, accepting the utility of Facebook and the deep connections it provides to community and friends rather than dwelling on how it could potentially hurt them (see Facebook: Day of Reckoning, or Back to Business as Usual?). This episode, however, seems to have inspired the fiercest, most negative responses to corporate violations of people's personal privacy since Cambridge Analytica was hired by the Trump campaign.

Facebook users also face potential long-term damage from the data losses. Many types of personally identifiable information never change. Even preferences - from political views to music to perspectives on social issues - are generally static. Hence the data that leaked through Kogan's app - and perhaps through many more under examination now by Facebook - as well as the conclusions social scientists drew from it could float around for years or decades, providing detailed insights into how individuals could potentially be better targeted or manipulated.

Deleting a Facebook account stops future data collection and future micro-targeting, at least on the social network. But apologies and policy tweaks can't fix the past. Zuckerberg has really messed up another one.